Enhancing Feature Tracking Reliability for Visual Navigation using Real-Time Safety Filter

🚀 Making Visual Navigation More Reliable

Enhancing Feature Tracking with a Real-Time Safety Filter

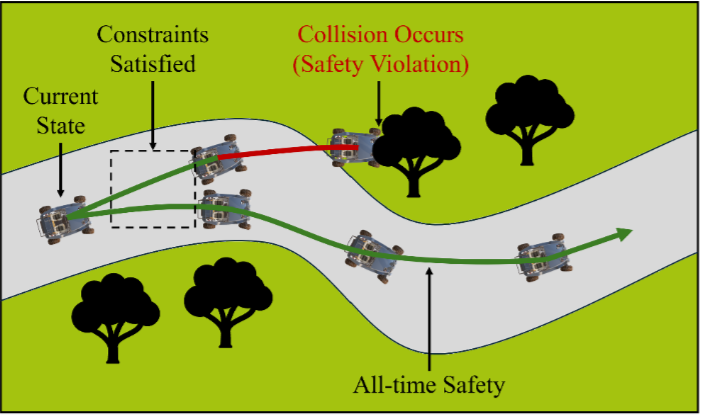

In environments without GPS, many robots rely on visual SLAM — using a camera to track their position by detecting and following visual features (like corners or edges). But here’s the catch:

🔍 If the number of visible features suddenly drops, SLAM can fail dramatically, causing tracking loss, localization errors, or even crashes.

Our work addresses this with a simple question:

Can we make a robot proactively preserve its feature visibility — before it’s too late?

📈 Why It Matters

- 🎯 SLAM safety: Prevents catastrophic failures due to sudden feature loss.

- ⚡ Real-time: The filter runs fast enough to be deployed on real robots.

- 🧠 Minimal intervention: Only adjusts motion when necessary — keeps nominal behavior otherwise.

🎯 Key Idea: A Real-Time Safety Filter for Features

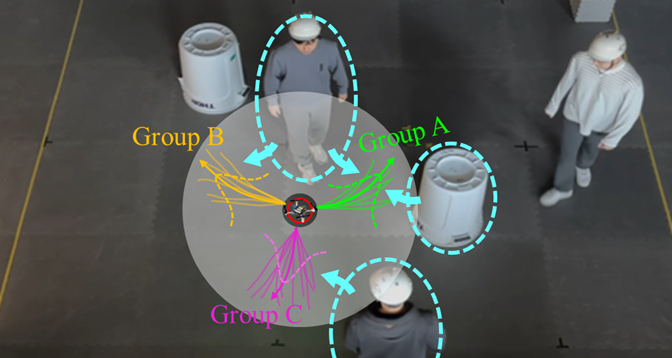

We propose a real-time safety filter that runs alongside the robot’s control system. Instead of blindly following a planned velocity, the filter modifies the velocity to keep enough visual features in the field of view (FoV).

🔧 How Safety Filtering Works (In Simple Terms)

Here’s what happens at each time step:

- A controller gives a reference velocity (v_ref) to the robot.

- The onboard camera detects visual features, and the filter formulates inequality constraints that keep a sufficient number of those features within the FoV, making the set of safe states forward invariant.

- A quadratic program (QP) solves for a new velocity (v_filtered) that:

  - Remains close to v_ref

  - Ensures the feature number score stays above a threshold
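The steps above can be sketched in a few lines of Python. This is only a minimal illustration under simplifying assumptions that are not taken from the paper: the robot is modeled as a single integrator (so the rate of change of the score is `grad_h · v`), there is one scalar constraint with `h = feature_score - threshold`, and the names `filter_velocity`, `grad_h`, and `alpha` are hypothetical. With a single linear constraint, the QP "stay as close as possible to v_ref" reduces to a closed-form projection onto a half-space, so no solver is needed here.

```python
def dot(a, b):
    """Dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def filter_velocity(v_ref, grad_h, h, alpha=1.0):
    """Return the velocity closest to v_ref that satisfies grad_h . v >= -alpha * h.

    Assumptions (hypothetical, for illustration only):
      - single-integrator model, so dh/dt = grad_h . v
      - h = feature_score - threshold (h >= 0 means "enough features")
      - alpha > 0 sets how quickly h is allowed to approach zero
    """
    bound = -alpha * h
    slack = dot(grad_h, v_ref) - bound
    norm_sq = dot(grad_h, grad_h)
    # The reference already satisfies the constraint, or the velocity
    # has no influence on the score: keep the nominal command.
    if slack >= 0.0 or norm_sq == 0.0:
        return list(v_ref)
    # Otherwise project v_ref onto the constraint boundary.
    scale = slack / norm_sq
    return [v - scale * g for v, g in zip(v_ref, grad_h)]


# Moving in +x reduces the score, so the filter slows the robot down.
v_filtered = filter_velocity(v_ref=[1.0, 0.0], grad_h=[-1.0, 0.0], h=0.5)
```

When the reference command does not threaten the score, the function returns it unchanged, which mirrors the "minimal intervention" property described earlier. A real system with several constraints or input limits would need a general QP solver instead of this closed form.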

🧩 Key Challenge and Our Solution

Traditional safety filtering methods, such as those based on Control Barrier Functions (CBFs), are designed to prevent the robot from violating hard safety constraints (e.g., collisions). However, directly applying such rigid constraints to feature visibility would over-constrain the system—preventing the robot from exploring, even when new features might become visible from newly observed regions.

Conversely, blindly following the reference command may cause the robot to lose too many features, degrading localization performance.

To resolve this trade-off, we propose a parameterized safety filter where each visual feature is assigned a parameter that reflects its importance. This parameter:

- Allows the filter to relax the constraint when a feature is less critical (parameter near zero), enabling the robot to let it move out of the FoV in favor of exploration.

- Enforces the constraint more strictly when the feature is important (parameter close to one), keeping it in view.

This behavior is embedded within a QP formulation that guarantees recursive feasibility—ensuring that a safe solution exists at every time step.
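To make the role of the per-feature parameter more concrete, here is a small Python sketch. It is one hypothetical way to express the idea and is not the formulation used in the paper: each feature gets a smooth visibility value between 0 and 1 based on its bearing relative to the camera axis, and the importance parameter simply weights that value in the overall score. The function names, the sigmoid shape, and the `sharpness` value are all assumptions made for illustration.

```python
import math


def visibility(bearing, half_fov, sharpness=10.0):
    """Smooth 0..1 indicator that a feature at `bearing` (rad) lies inside the FoV.

    Hypothetical choice: a sigmoid of the angular margin to the FoV edge.
    """
    margin = half_fov - abs(bearing)
    return 1.0 / (1.0 + math.exp(-sharpness * margin))


def weighted_feature_score(bearings, importance, half_fov):
    """Sum of visibilities, each scaled by its importance parameter in [0, 1]."""
    return sum(p * visibility(b, half_fov) for b, p in zip(bearings, importance))


# Two features: one centered, one near the FoV edge.
bearings = [0.0, 0.55]
half_fov = 0.6

# Both features important: losing the edge feature lowers the score.
strict = weighted_feature_score(bearings, [1.0, 1.0], half_fov)

# Edge feature marked unimportant: it no longer affects the score,
# so a filter built on this score would let it leave the FoV.
relaxed = weighted_feature_score(bearings, [1.0, 0.0], half_fov)
```

In this sketch a parameter near zero removes a feature's influence on the constraint, while a parameter near one makes the score sensitive to that feature leaving the view, which matches the relax/enforce behavior described above.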

For a rigorous mathematical treatment, please refer to our paper.

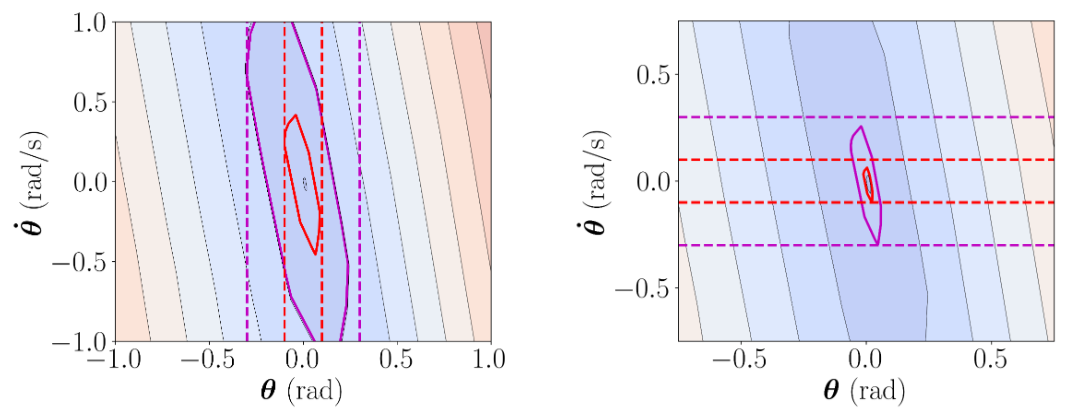

✅ Simulation:

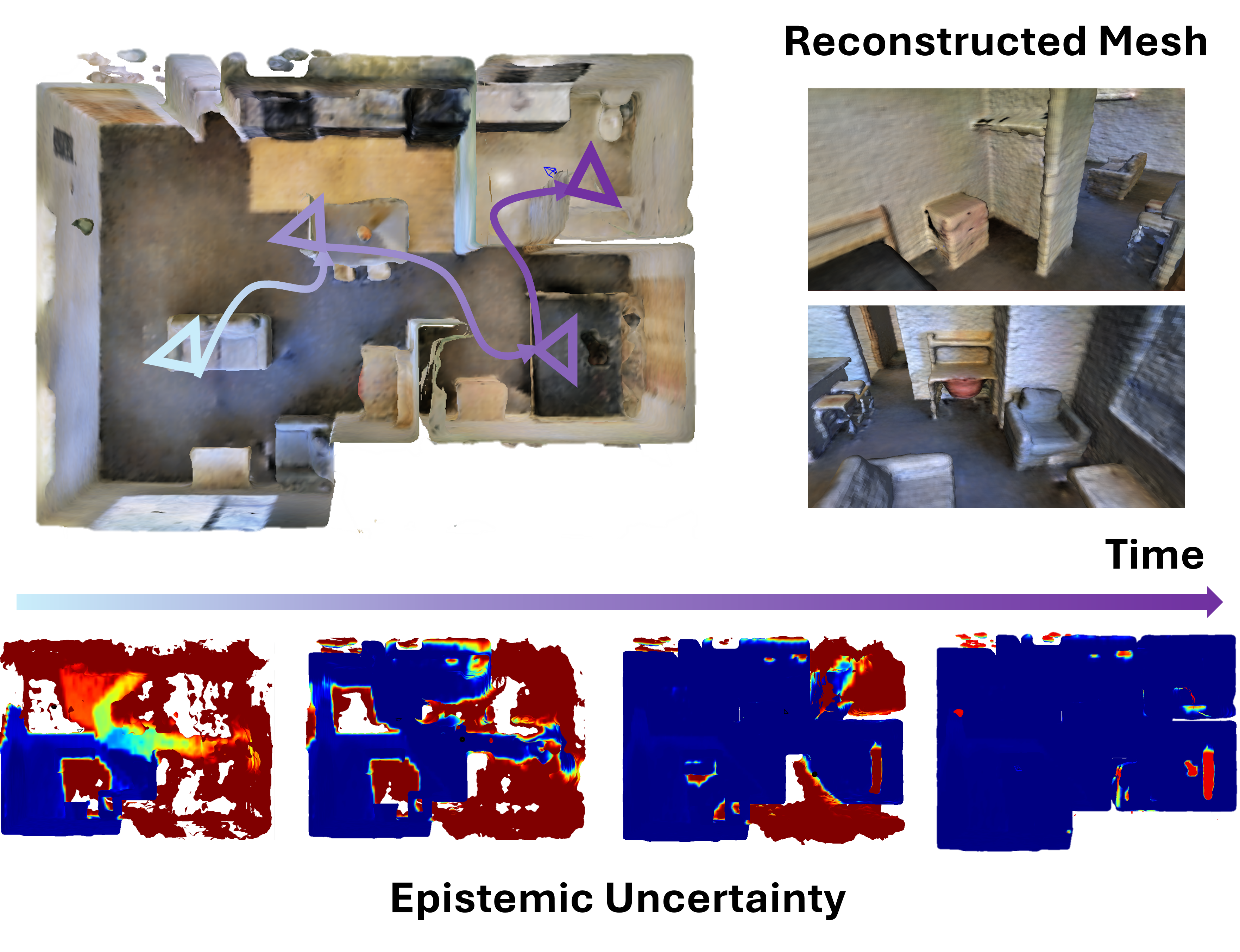

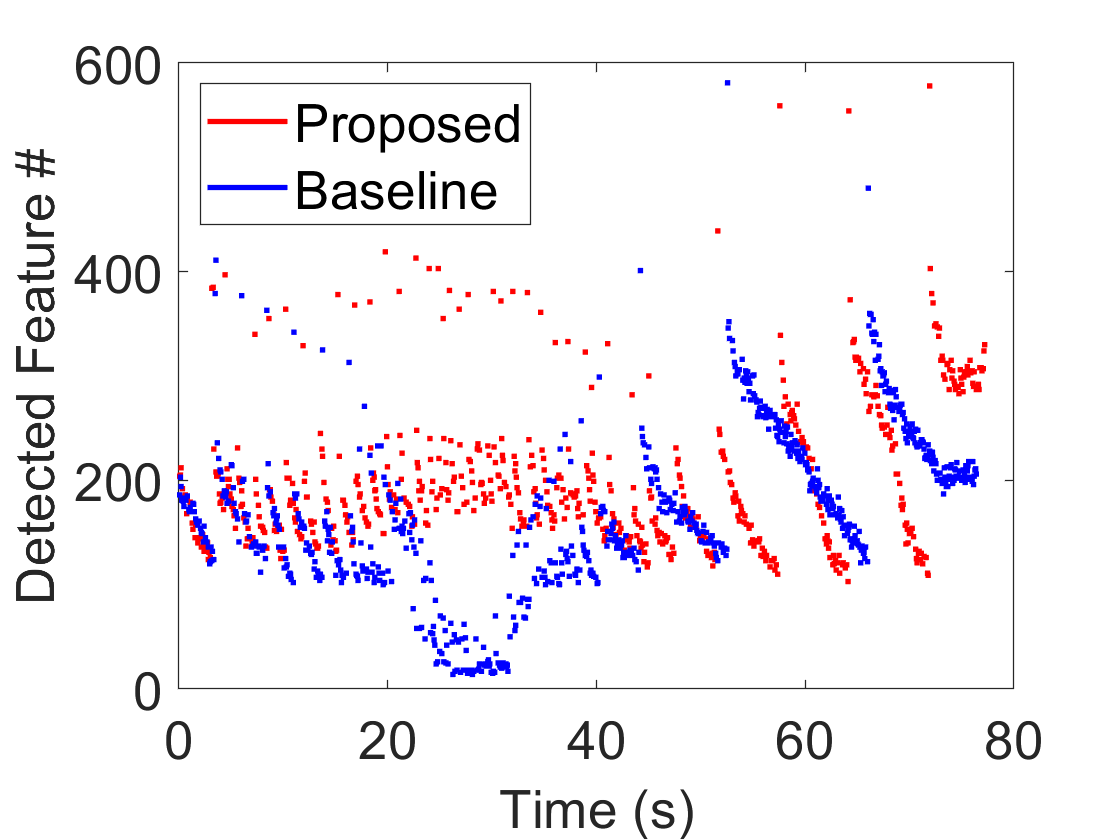

- We tested in simulation with a robot commanded to move along a circular path while holding a fixed orientation.

- Without the filter, the robot frequently lost visual features.

- With the filter, it adaptively slowed or adjusted its path to maintain feature visibility.

Video panels: Baseline Result and Proposed Result.

🧪 Experiments

- We ran real-world experiments using a ground robot equipped with a stereo camera and a servo motor for active camera orientation.

- The task: traverse alongside a wall, keeping the camera facing perpendicular.

- Without the filter, the robot entered feature-poor regions and suffered tracking loss.

- With the filter, it proactively adjusted orientation to preserve feature visibility and only returned to the reference path once new features were found.

Video panels: Baseline and Proposed, each with an onboard view.

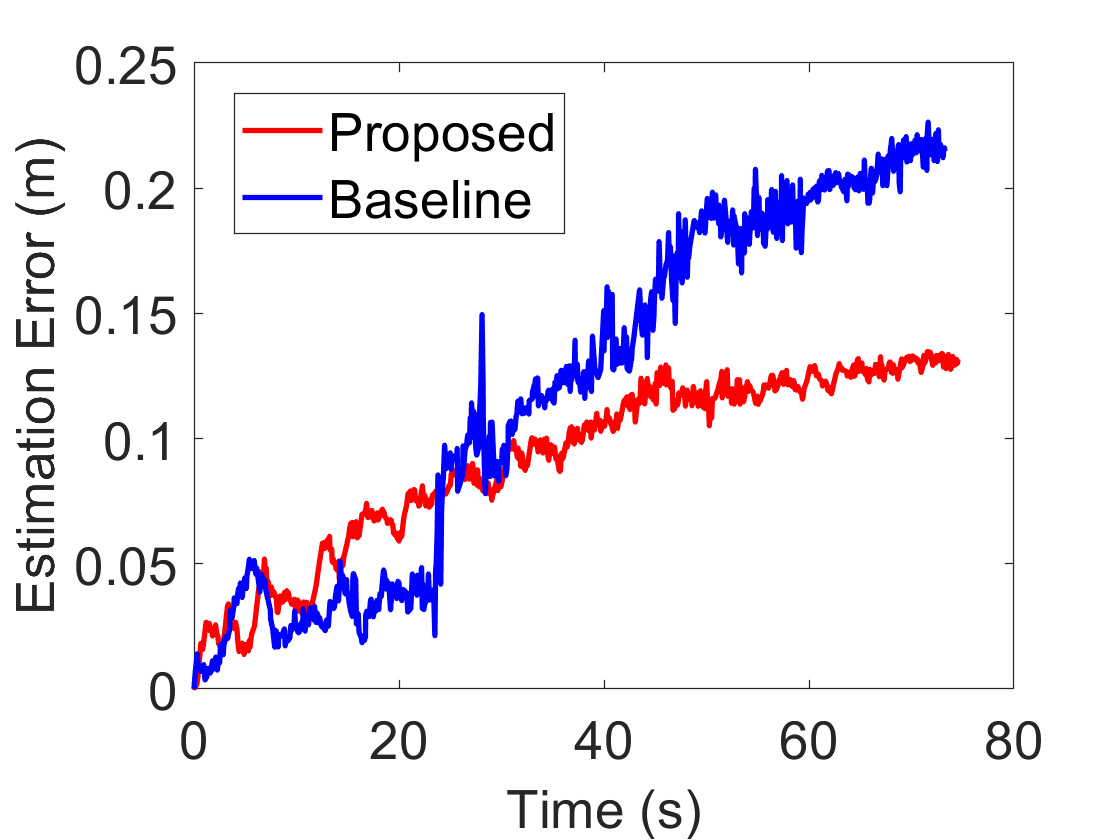

- The plot shows that without the filter, a sudden drop in tracked features (left) leads to a sharp rise in localization error (right).

- With the filter, the feature count remains stable and drift increases more gradually.

🧩 Future Ideas

We’re excited to:

- Combine this with perception-aware planning to guide robots toward feature-rich regions, completing the full visual navigation stack.

- Extend it to autonomous exploration, enabling robots to safely explore unknown environments without losing visual features.

BibTeX

@article{kim2025enhancing,

title={Enhancing Feature Tracking Reliability for Visual Navigation using Real-Time Safety Filter},

author={Kim, Dabin and Jang, Inkyu and Han, Youngsoo and Hwang, Sunwoo and Kim, H Jin},

journal={arXiv preprint arXiv:2502.01092},

year={2025}

}